My primary areas of research are philosophy of psychology (including perception and social cognition), philosophy of mind, philosophy of science, and philosophy of technology. My work in these areas explores the nature and structure of bias and purported cases of implicit content---e.g., transformation principles within the visual-perceptual system, computational processes in socio-cognitive systems, scientific inference, and predictive models in artificial intelligence programs---as well as the implications the possibility of implicit content has for computational theories of mind more generally.

You can read more about my research below or by visiting my PhilPapers profile.

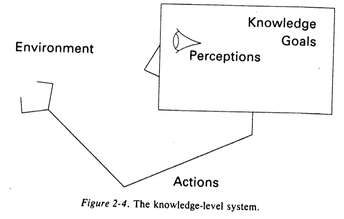

From Newell's Unified Theories of Cognition, 1994

What is a bias? Standard philosophical views of both implicit and explicit bias focus this question on the representations one harbors, e.g., stereotypes or implicit attitudes, rather than the ways in which those representations (or other mental states) are manipulated. I call this approach representationalism. In this paper, I argue that representationalism taken as a general theory of psychological social bias is a mistake because it conceptualizes bias in ways that do not fully capture the phenomenon. Crucially, this view fails to capture a heretofore neglected possibility of bias, one that influences an individual's beliefs about or actions toward others, but is, nevertheless, nowhere represented in that individual's cognitive repertoire. In place of representationalism, I develop a functional account of psychological social bias that characterizes it as a mental entity that takes propositional mental states as inputs and returns propositional mental states as outputs in a way that instantiates social-kind inductions. This functional characterization leaves open which mental states and processes bridge the gap between the inputs and outputs, ultimately highlighting the diversity of candidates that can serve this role.

Algorithmic Bias: on the Implicit Biases of Social Technology, 2020, Synthese, https://doi.org/10.1007/s11229-020-02696-y

Often machine learning programs inherit social patterns reflected in their training data without any directed effort by programmers to include such biases. Computer scientists call this algorithmic bias. This paper explores the relationship between machine bias and human cognitive bias. In it, I argue that similarities between algorithmic and cognitive biases indicate a disconcerting sense in which sources of bias emerge out of seemingly innocuous patterns of information processing. The emergent nature of this bias obscures the existence of the bias itself, making it difficult to identify, mitigate, or evaluate using standard resources in epistemology and ethics. I demonstrate these points in the case of mitigation techniques by presenting what I call 'the Proxy Problem'. One reason biases resist revision is that they rely on proxy attributes, seemingly innocuous attributes that correlate with socially-sensitive attributes, serving as proxies for the socially-sensitive attributes themselves. I argue that in both human and algorithmic domains, this problem presents a common dilemma for mitigation: attempts to discourage reliance on proxy attributes risk a tradeoff with judgement accuracy. This problem, I contend, admits of no purely algorithmic solution.

The Psychology of Bias, 2020, in An Introduction to Implicit Bias: Knowledge, Justice, and the Social Mind, Erin Beeghly and Alex Madva (eds.), Routledge, Penultimate Draft

What’s going on in the head of someone with an implicit bias? Psychological and philosophical attempts to answer this question have centered on one of two distinct data patterns displayed in studies of individuals with implicit biases: divergence and rational responsiveness. However, explanations focused on these different patterns provide different, often conflicting answers to the question. In this chapter, I provide a literature review that addresses these tensions in data, method, and theory in depth. I begin by surveying the empirical data concerning patterns of divergence and rational responsiveness. Next, I review the psychological theories that attempt to explain these patterns. Finally, I suggest that tensions in the psychological study of implicit bias highlight the possibility that implicit bias is, in fact, a heterogeneous phenomenon, and thus, future work on implicit bias will likely need to abandon the idea that all implicit biases are underwritten by the same sorts of states and processes.

Are Algorithms Value-Free? Feminist Theoretical Virtues in Machine Learning, 2023, Journal of Moral Philosophy, https://doi.org/10.1163/17455243-20234372

As inductive decision-making procedures, the inferences made by machine learning programs are subject to underdetermination by evidence and bear inductive risk. One strategy for overcoming these challenges is guided by a presumption in philosophy of science that inductive inferences can and should be value-free. Applied to machine learning programs, the strategy assumes that the influence of values is restricted to data and decision outcomes, thereby omitting internal value-laden design choice points. In this paper, I apply arguments from feminist philosophy of science to machine learning programs to make the case that the resources required to respond to these inductive challenges render critical aspects of their design constitutively value-laden. I demonstrate these points specifically in the case of recidivism algorithms, arguing that contemporary debates concerning fairness in criminal justice risk-assessment programs are best understood as iterations of traditional arguments from inductive risk and demarcation, and thereby establish the value-laden nature of automated decision-making programs. Finally, in light of these points, I address opportunities for relocating the value-free ideal in machine learning and the limitations that accompany them.

Unconscious Perception and Unconscious Bias: Parallel Debates about Unconscious Content, 2023, in Oxford Studies in Philosophy of Mind, vol. 3, Uriah Kriegel (ed.), Penultimate Draft

The possibilities of unconscious perception and unconscious bias prompt parallel debates about unconscious mental content. This chapter argues that claims within these debates alleging the existence of unconscious content are made fraught by ambiguity and confusion with respect to the two central concepts they involve: consciousness and content. Borrowing conceptual resources from the debate about unconscious perception, the chapter distills the two conceptual puzzles concerning each of these notions and establishes philosophical strategies for their resolution. It then argues that empirical evidence for unconscious bias falls victim to these same puzzles, but that progress can be made by adopting similar philosophical strategies. Throughout, the chapter highlights paths forward in both debates, illustrates how they serve as fruitful domains in which to study the relationship between philosophy and empirical science, and uses their combined study to further understanding of a general theory of unconscious content.

The (Dis)Unity of Psychological (Social) Bias, 2024, Philosophical Psychology, https://doi.org/10.1080/09515089.2024.2366418

This paper explores the complex nature of social biases, arguing for a functional framework that recognizes their unity and diversity. The functional approach posits that all biases share a common functional role in overcoming underdetermination. This framework, I argue, provides a comprehensive understanding of how all psychological biases, including social biases, are unified. I then turn to the question of disunity, demonstrating how psychological social biases differ systematically in the mental states and processes that constitute them. These differences indicate that biases at various levels of the cognitive architecture require distinct treatment along at least two dimensions: epistemic evaluation and mitigation strategies. By examining social biases through this dual lens of unity and diversity, we can more effectively identify when and how to intervene on problematic biases. Ultimately, this approach provides a nuanced understanding of the nature of social bias, offering practical guidance for addressing existing biases and proactively managing emerging biases in both human and artificial minds.

Varieties of Bias, 2024, Philosophy Compass, https://doi.org/10.1111/phc3.13011

The concept of bias is pervasive in both popular discourse and empirical theorizing within philosophy, cognitive science, and artificial intelligence. This widespread application threatens to render the concept too heterogeneous and unwieldy for systematic investigation. The primary aim of this article is to argue for the feasibility of identifying a single theoretical category, termed ‘bias’, that could be unified by its functional role across different contexts. To achieve this aim, the article provides a comprehensive review of theories of bias that are significant in the fields of philosophy of mind, cognitive science, machine learning, and epistemology. It focuses on key examples such as perceptual bias, implicit bias, explicit bias, and algorithmic bias, scrutinizing their similarities and differences. Although it may not conclusively establish the existence of a natural theoretical kind, pursuing the possibility offers valuable insights into how bias is conceptualized and deployed across diverse domains, thus deepening our understanding of its complexities across a wide range of cognitive and computational processes.

Works in Progress:

Drafts Available Upon Request

The Hard Proxy Problem: Proxies Aren't Intentional, They're Intentional

This paper concerns the Proxy Problem: often machine learning programs utilize seemingly innocuous features as proxies for socially-sensitive attributes, posing various challenges for the creation of ethical algorithms. I argue that to address this problem, we must first settle a prior question of what it means for an algorithm that only has access to seemingly neutral features to be using those features as “proxies” for, and so to be making decisions on the basis of, protected-class features. Borrowing resources from philosophy of mind and language, I argue the answer depends on whether discrimination against those protected classes explains the algorithm’s selection of individuals. This approach rules out standard theories of proxy discrimination in law and computer science that rely on overly intellectual views of agent intentions or on overly deflationary views that reduce proxy use to statistical correlation. Instead, my theory highlights two distinct ways an algorithm can reason using proxies: either the proxies themselves are meaningfully about the protected classes, highlighting a new kind of intentional content for philosophical theories in mind and language; or the algorithm explicitly represents the protected-class features themselves, and proxy discrimination becomes regular, old, run-of-the-mill discrimination.

What Bias Explains: A Philosophical Perspective on Bias in Empirical Psychology

This article reevaluates the concept of bias in social psychology, critiquing the emerging behavioral trend spearheaded by Jan De Houwer and Bertram Gawronski. It argues that defining bias solely as a behavioral phenomenon, without reference to mental states and processes, falls short of the explanatory goals of empirical psychology. The paper highlights the limitations of the behaviorist arguments, including concerns about resistance to bias being in the head, challenges in measuring mental constructs compared to behavior, and the circularity of explanations involving bias as a mental construct. Advancing an alternative, I offer a psychological account of bias that reconciles its status as both functional and cognitive. This approach aligns our concept of bias with broader computational theory, including vision science and machine learning, and enhances current empirical methods for its study. The piece concludes by demonstrating this point, gesturing at the implications of this cognitive approach for ongoing debates around unconscious bias.

This paper examines the increasing prevalence of Large Language Models like GPT and PaLM in our daily lives, raising critical questions about whether these AI systems truly understand human languages or merely mimic them. It argues that the current trajectory of AI in linguistic tasks is creating more convincing illusions of communication rather than the genuine article. By comparing the development, goals, and structure of AI systems with human cognitive processes, the paper highlights the stark contrast between AI's domain-general statistical approach and human minds' focus on causal-explanatory understanding. Drawing from cognitive science research, it suggests that the internal representational capacities of AI systems significantly differ from human cognition. This leads to critical miscommunications when, for example, we take an algorithm’s use of some concept like recidivism risk to be the same as our own and base real-world decisions on their predictions using those concepts. This suggests that successful communication between humans and machines will not be possible without radical changes to the development, structure, and goals of artificial systems.

Thinking Outside the Black Box: the vertical proxy problem for AI

Coauthored with Katrina Elliott (Brandeis)

Through an unimaginably efficient process of trial and error, machine learning programs exploit previously unknown or unusable correlations to make accurate predictions much more efficiently than humans and human-written algorithms. Despite their utility, the use of ML algorithms is controversial. Many authors have argued that ML predictive models are worryingly opaque; ML algorithms are typically too complex and too gerrymandered for humans to comprehend. On the other hand, we have always had to make do with epistemically opaque scientific methods. Is it any more worrying if we can’t say why an ML program is making the decision it is so long as we can still be confident? Yes, we argue. ML algorithms set the stage for a new kind of proxy problem that conflates levels of explanation: ML algorithms are able to detect low-level proxies of higher-level phenomena that would otherwise be invisible to humans, and to project potential correlations among these low-level proxies that are accidental, misleading, and problematic. Our research into this unique kind of proxy problem will unearth interesting morals for philosophers of science and social ontologists, as well as for ethicists, political philosophers, policy makers, and activists.